Pao Ramen

-

Laurence Tratt: Four Kinds of Optimisation

Apr 23 · tratt.net ⎯ Premature optimisation might be the root of all evil, but overdue optimisation is the root of all frustration. No matter how fast hardware becomes, we find it easy to write programs which run too slow. Often this is not immediately apparent. Users can go for years without considering a program’s performance to be an issue before it suddenly becomes so — often in the space of a single working day.

-

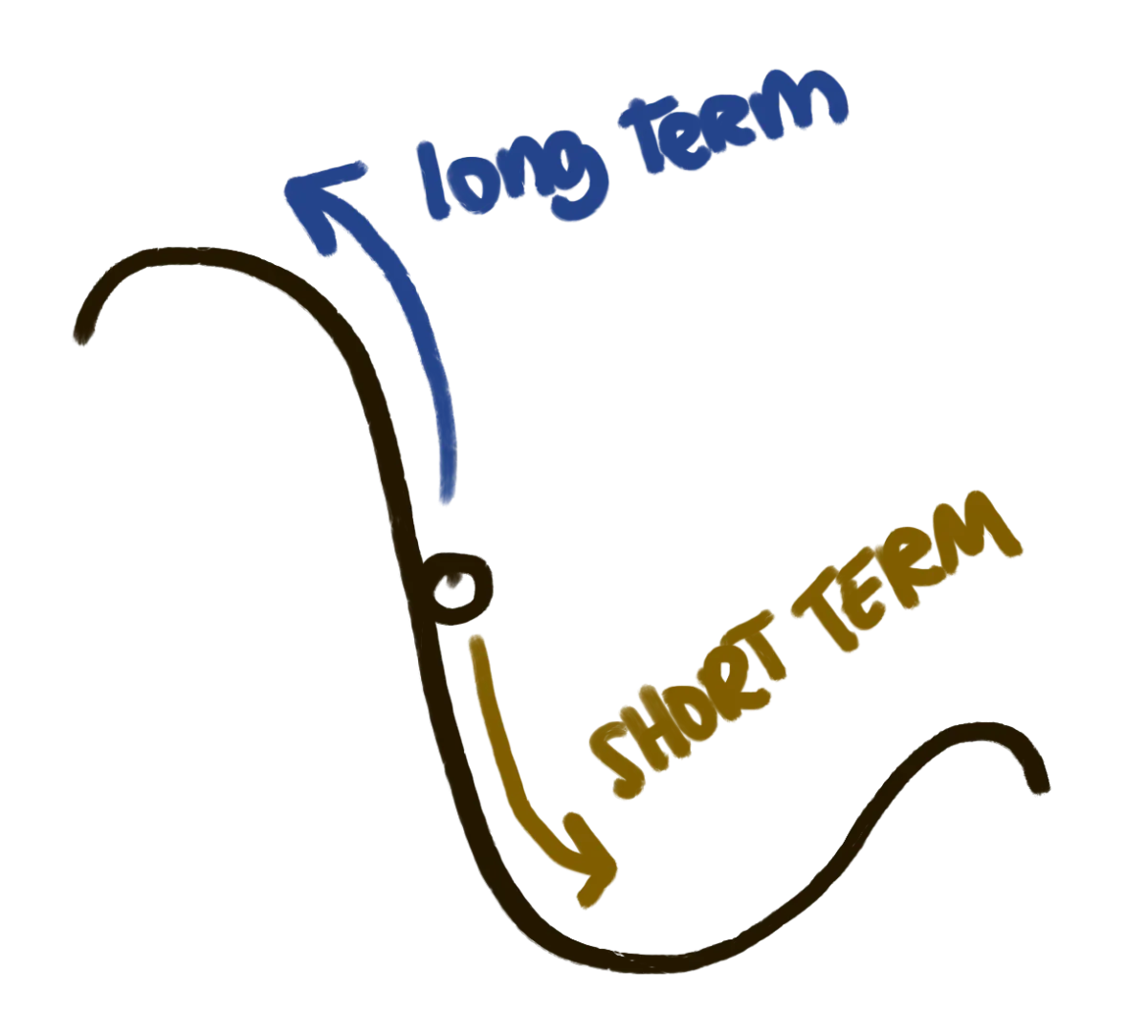

The Law of Shitty Clickthroughs at andrewchen

Apr 22 · andrewchen.com ⎯ Dear readers, I have moved to Substack and I will be writing here from now on: 👉 andrewchen.substack.com In the meantime, I will leave andrewchen.com up for posterity. Enjoy!

-

Ghosting Spotify: A How-To Guide

Apr 20 · littledoor.substack.com ⎯ The Joyful Sabotage Guide to Supporting Real Music and Reigniting Pleasure in the Age of Tech Oligarchy

-

How I Accidentally Triggered A Hacker News Manhunt

Apr 20 · posthuman.blog ⎯ I made it to the front page of Hacker News. Then they tried to cancel me for being a bot. This is the story.

-

Work - Pascal Pixel

Apr 20 · pascalpixel.com ⎯ Portfolio of digital products and design work, including Horse Browser, The Next Web redesign, and various projects focused on creating intuitive, ADHD-friendly interfaces.

-

You’re Only As Strong As Your Weakest Point

Apr 20 · blog.jim-nielsen.com ⎯ Writing about the big beautiful mess that is making things for the world wide web.

-

RisingWave: Open-Source Streaming Database

Apr 19 · risingwave.com ⎯ RisingWave is a stream processing platform that utilizes SQL to enhance data analysis, offering improved insights on real-time data.

-

Defold - Official Homepage - Cross platform game engine

Apr 19 · defold.com ⎯ Defold is a free and open game engine used for development of console, desktop, mobile and web games.

-

Everything You Need to Know About Incremental View Maintenance

Apr 19 · materializedview.io ⎯ An overview of incremental view maintenance, why it’s useful, and how it's implemented.

-

Pablo Picasso's Stunning Repetitions

Apr 18 · jillianhess.substack.com ⎯ “I am the notebook”

-

Passing planes and other whoosh sounds

Apr 18 · www.windytan.com

-

Steam Store Graphical Asset Creator

Apr 17 · www.steamassetcreator.com ⎯ Upload 1 artwork and generate all the graphical assets required for your Steam page in one click.

-

Introducing Kermit: A typeface for kids - Microsoft Design

Apr 16 · microsoft.design ⎯ Using design to empower children by making reading easier, improving comprehension, and helping dyslexics.

-

How to Hire

Apr 16 · hvpandya.com ⎯ A more scalable way to access unproven talent before anyone else.

-

Unsure Calculator

Apr 16 · filiph.github.io ⎯ The Unsure Calculator is an online tool that lets you calculate with numbers you’re not sure about.

-

One Year of Mahjong Solitaire – New and Familiar

Apr 16 · blogs.gnome.org ⎯ I’ve always liked the concept of small five-minute games to fill some time. Puzzle games that start instantly and keep your mind sharp, without unnecessary ads, distractions and microtransactions. Classics like Minesweeper and Solitaire come to mind, once preinstalled on every Windows PC. It was great fun during moments without an internet connection.

-

GitHub - 9Morello/gatehouse-ts: A flexible, zero-dependencies authorization TypeScript library based on the Gatehouse library for Rust

Apr 14 · github.com

-

Celebrating 50 years of Microsoft | Bill Gates

Apr 13 · www.gatesnotes.com ⎯ Article URL: https://www.gatesnotes.com/home/home-page-topic/reader/microsoft-original-source-code

-

14 Questions to Audit Your Homepage Messaging (Before It Silently Kills Sales)

Apr 13 · fronterabrands.com ⎯ You know this as a buyer. When you plan to buy a B2B service, you go to that brand’s website. You read the homepage. You try to understand what they do, how they do it, and how they are different. And based on what you see, you form an opinion and make a decision: to…

-

WordSafety.com

Apr 11 · wordsafety.com ⎯ Trying to decide a name for a website, app or other project?